EXHIBITION

Type Motion

FACT (the Foundation for Art & Creative Technology), Liverpool

8 January — 18 January 2015

The TYPuzzle system was developed into an interactive installation that was exhibited in FACT's Dev Lab during the latter stages of the Type Motion exhibition. The project resonated with the subject of the exhibition, allowing visitors to design typographic forms and collaborate in the production of animated type.

Although commissioned for Type Motion, this exhibited iteration of the project was also used as a test bed, both for testing the premise of the system as a means of producing animated type and for exploring practical issues of interaction design and user experience associated with developing such a system for public use.

The development of the exhibition installation is documented on the project blog.

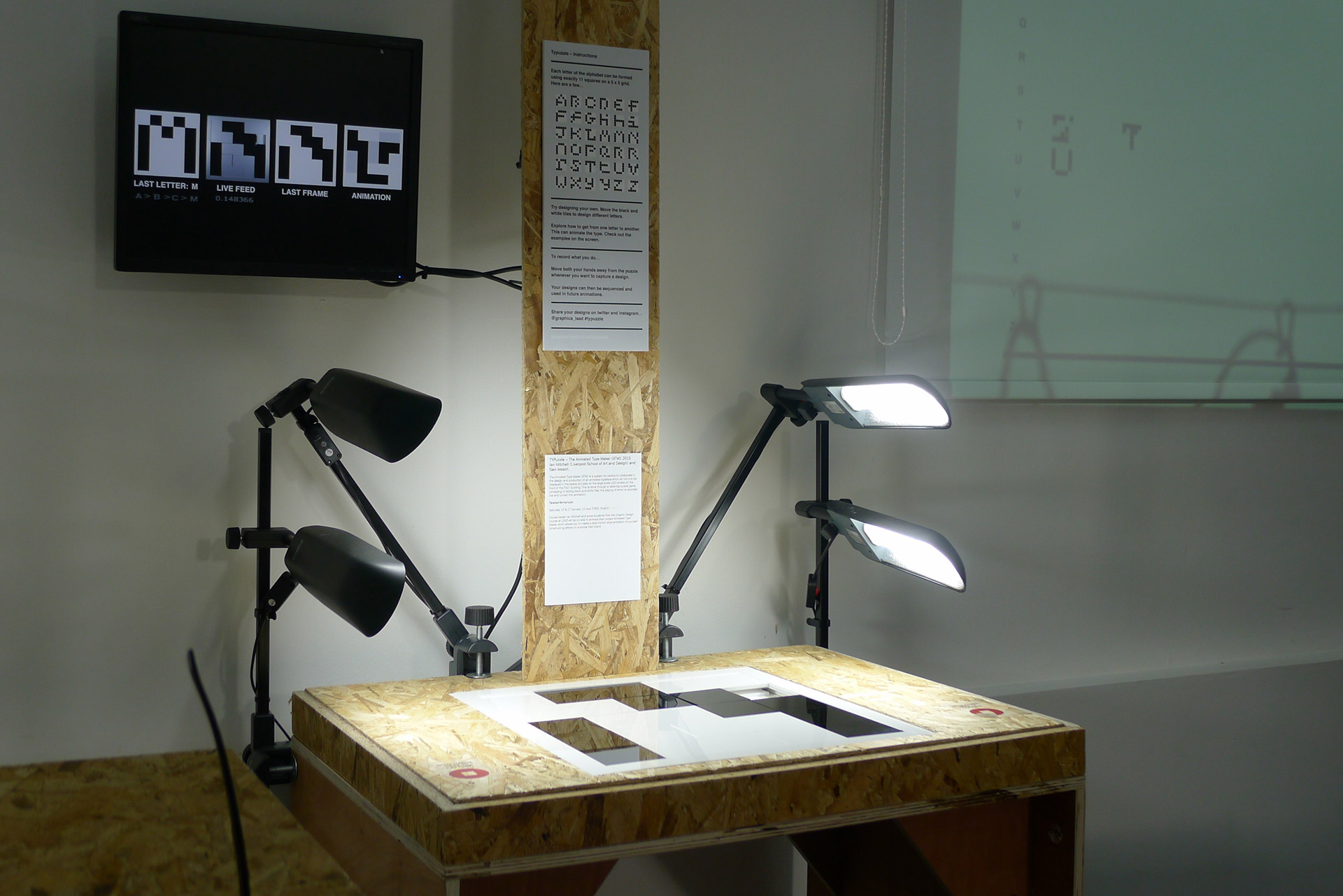

Table-top puzzle game set-up

The Installation

The installation consisted of:

- a table-top analogue interface of sliding black and white tiles made out of 5mm perspex, designed and manufactured in Liverpool School of Art & Design’s FabLab.

- the puzzle interface was housed on a rostrum holding a video camera and lighting. The camera, rigged directly above the interface, recorded the sliding moves as participants played with the puzzle.

- the recording and processing of the video was controlled by the real-time media manipulation software Isadora. An Isadora application, developed by north-west based “video smith” Sam Meech, captured small fragments of video each time the puzzle interface was used. A live stream, along with the processed video and a few seconds of animation, was displayed on a monitor.

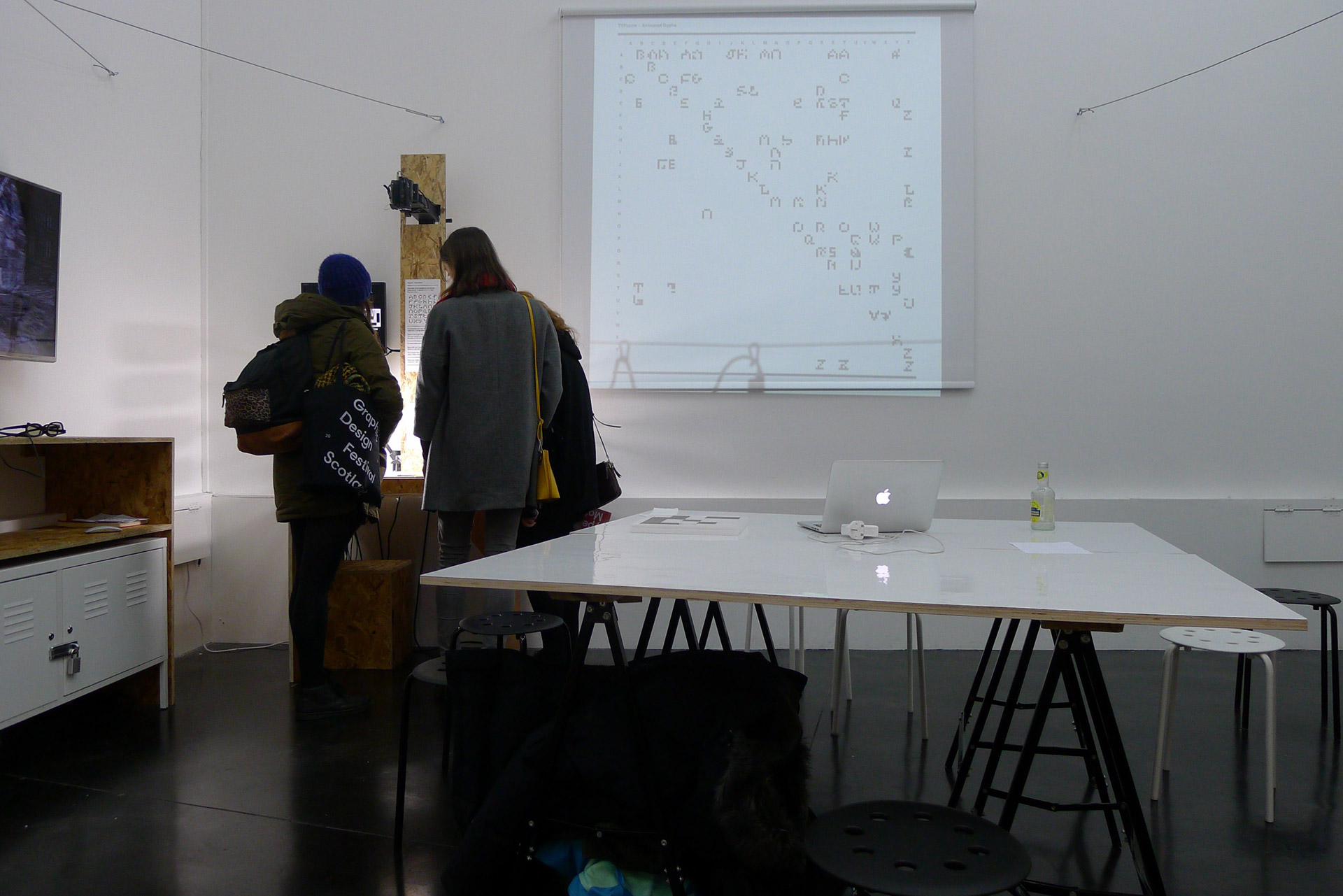

- the captured video was then manually processed using After Effects to produce small looped GIF animations for each transition from one letter to another. These GIFs were then projected in the space via an updatable web browser interface.

- QuickTime sequences were also produced for each glyph transition and then edited together into longer sequences of animated text. These sequences were arranged in various patterns within a 50 by 50 matrix so the results could be displayed on FACT’s external dot matrix video wall.

Timelapse of recordings made of the TYPuzzle installation in use during the Type Motion exhibition.

FACT's external dot matrix video wall displaying the animated type sequences produced by the installation.

How it worked

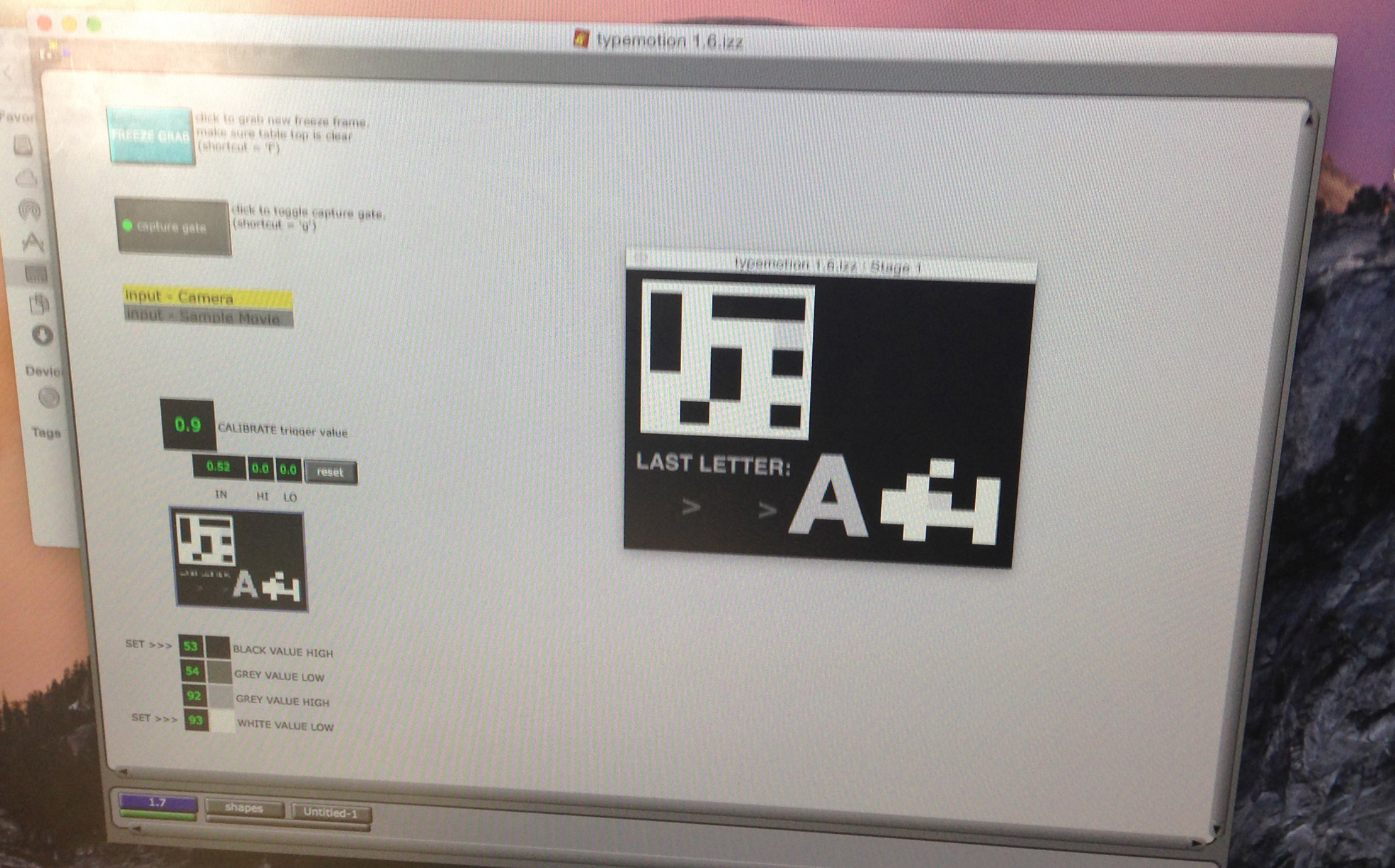

An Isadora application was written by Sam Meech to capture very short (less than a second) bursts of video when someone used the puzzle. However, the application was designed to capture video when it detected motion stopping, rather than starting, so as to avoid capturing people's arms and hands in the shot. This was achieved by comparing the live video stream with a stored image of the puzzle not in use. Any difference detected prepared Isadora to capture; when the difference then dropped below a threshold (in other words, there were no hands or arms in shot and everything was still), capture was triggered.
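The arm-then-trigger logic described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the Isadora patch itself: the names `mean_abs_difference` and `StopCaptureTrigger`, the threshold value, and the use of a mean absolute pixel difference are all assumptions made for the example. The reference image stays fixed here; how the original application handled tiles that had moved since the reference was stored is not described, so that detail is left out.

```python
from typing import Sequence

Frame = Sequence[Sequence[int]]  # greyscale pixels, 0-255 (assumed format)


def mean_abs_difference(frame: Frame, reference: Frame) -> float:
    """Average per-pixel absolute difference between a frame and the reference."""
    total = 0
    count = 0
    for row, ref_row in zip(frame, reference):
        for pixel, ref_pixel in zip(row, ref_row):
            total += abs(pixel - ref_pixel)
            count += 1
    return total / count if count else 0.0


class StopCaptureTrigger:
    """Arms when the scene differs from the reference; fires once it settles."""

    def __init__(self, reference: Frame, threshold: float = 10.0) -> None:
        self.reference = reference
        self.threshold = threshold  # hypothetical value, tuned by hand
        self.armed = False

    def update(self, frame: Frame) -> bool:
        """Return True on the frame where a capture should be triggered."""
        difference = mean_abs_difference(frame, self.reference)
        if difference >= self.threshold:
            # Hands or arms are in shot: get ready, but do not capture yet.
            self.armed = True
            return False
        if self.armed:
            # The difference has dropped back below the threshold: capture once.
            self.armed = False
            return True
        return False


# Tiny 2x2 frames standing in for video: empty table, hand in shot, empty again.
empty = [[0, 0], [0, 0]]
hand = [[200, 200], [0, 0]]
trigger = StopCaptureTrigger(reference=empty, threshold=10.0)
results = [trigger.update(f) for f in [empty, hand, hand, empty, empty]]
print(results)  # [False, False, False, True, False]
```

The trigger fires exactly once, on the first still frame after an interaction, which is the behaviour that keeps hands out of the captured footage when participants move away from the puzzle.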

Under the bonnet. Screenshot of the Isadora application developed by Sam Meech.

The system was designed to be as intuitive and automated as possible. The principal concern of the interaction design and user experience was to remain faithful to the playful nature of the original puzzles, with as few interactive procedures or instructions as possible, whilst producing captured footage that could easily be turned into animated sequences. Therefore, all users had to do to record what they were doing was move their hands away from the puzzle each time they wanted to capture an image.

Limitations

Although an elegant solution, this design had limitations, which were observed during the two workshop days.

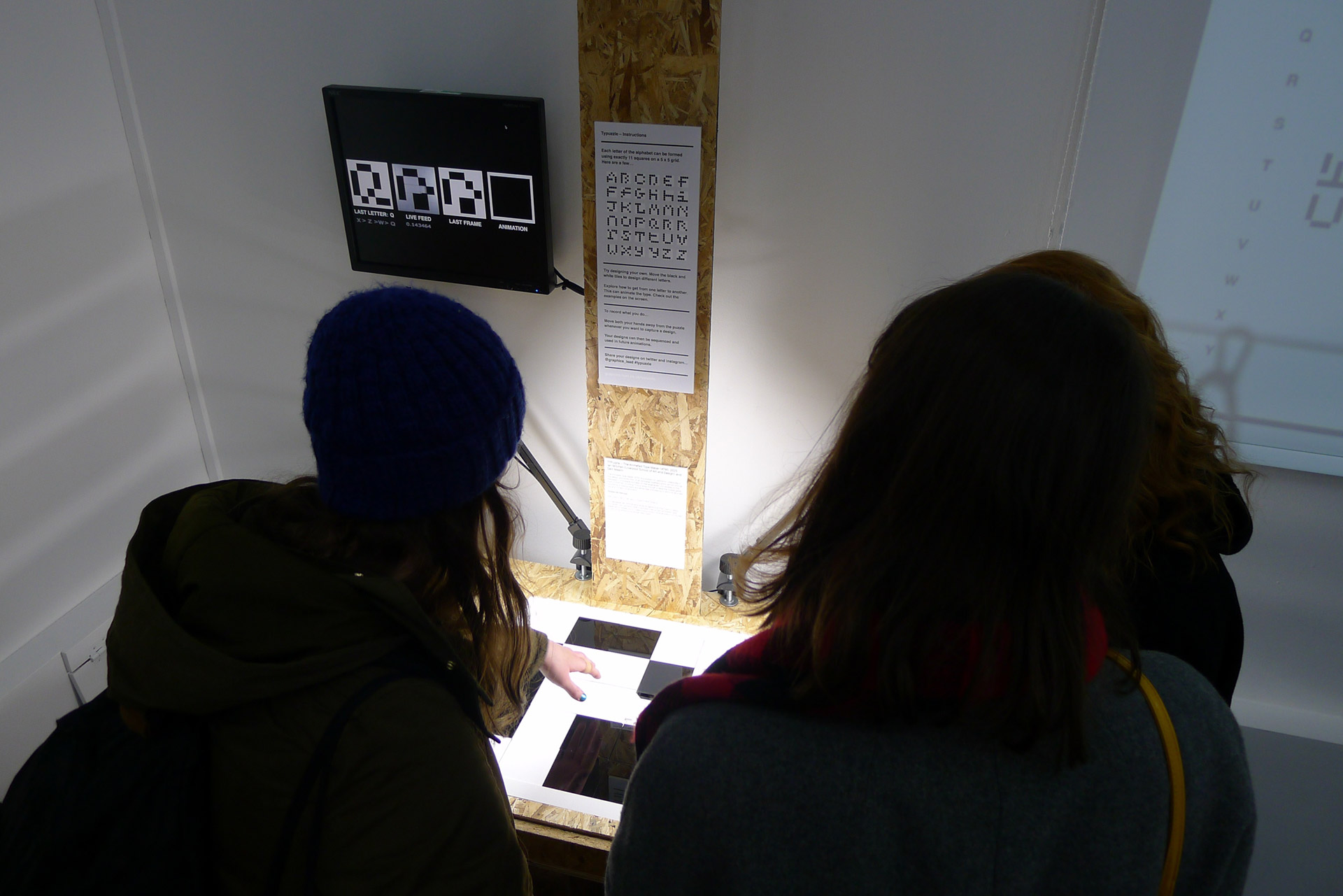

From a technical perspective, the detection system proved a little unstable, causing footage to be captured at the wrong time, either when hands were in the shot or when no interaction had taken place. The application could be reset at this point if the installation was being supervised, but this was clearly not ideal in an exhibition scenario. More significant, though, were the observations of how people intuitively used the system. People did not want to (or did not realise that they had to) move their hands out of shot, despite some very simple instructions. In some cases people's heads were captured as groups hunched over the puzzle. Although this produced unwanted results, it also showed how engaging and entertaining the system was.

The exhibition confirmed that a more technically ambitious approach would be needed if the installation were to be developed any further. The following options had all been considered during the initial proposal stage:

- Mounting the video camera below the table-top puzzle interface could avoid the issue of unwanted obstruction of captured footage. It would require a custom-built camera rostrum and a housing system that could be lit internally and give flexible access for servicing. It would, however, maintain the live action quality of the captured footage.

- Rather than capturing live video, data about the position of each tile could be captured with an Arduino-based system with electronics built into the puzzle interface. However, the results would have no live action quality and would produce very mechanical animation.

- A combination of both these approaches could be taken, where electronics built into the puzzle could trigger the capture of video images from a camera positioned below the puzzle.
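To illustrate why animation built purely from tile position data would look mechanical, the sketch below generates in-between frames by moving each tile in a straight line between two recorded states. It is a hypothetical illustration only: the tile identifiers, the `(column, row)` coordinate format, and the function name `interpolate_states` are assumptions, and nothing here reflects an actual Arduino implementation.

```python
from typing import Dict, List, Tuple

Position = Tuple[float, float]      # (column, row) in grid units (assumed)
PuzzleState = Dict[str, Position]   # hypothetical tile id -> position


def interpolate_states(
    start: PuzzleState, end: PuzzleState, steps: int
) -> List[PuzzleState]:
    """Return steps + 1 states moving every tile linearly from start to end."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    frames: List[PuzzleState] = []
    for i in range(steps + 1):
        t = i / steps  # progress from 0.0 to 1.0
        frames.append(
            {
                tile: (
                    sx + (end[tile][0] - sx) * t,
                    sy + (end[tile][1] - sy) * t,
                )
                for tile, (sx, sy) in start.items()
            }
        )
    return frames


# Tile "a" slides one cell down while tile "b" stays where it is.
before = {"a": (0, 0), "b": (1, 0)}
after = {"a": (0, 1), "b": (1, 0)}
frames = interpolate_states(before, after, steps=4)
print(frames[2]["a"])  # (0.0, 0.5)
```

Every tile travels at a constant speed along a straight path, with none of the hesitations, nudges and corrections that recorded video of real hands would preserve.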

Alternatively, any future iteration of the installation could focus on the system's ability to capture the design of letter forms rather than also recording the transitional animated sequences, thus simplifying all aspects of the system. More emphasis could be placed on the uniqueness of the modular design system with the development of other grid configurations.

Photographs

The game in use during the workshop days

Looking back at the dot matrix video above the entrance to FACT

Animated type on the dot matrix video wall